At the recent San Francisco Stack Overflow DevDays, I had the opportunity to sit through two presentations, one from each of the two biggest players in mobile OS platforms: Apple (iPhone) and Google (Android). I will start with the highlights from the iPhone talk. Read the rules for developing on the iPhone and see if they sound friendly and inviting:

- You have to learn a 20-plus-year-old programming language with a toolkit that is almost as old

- You must follow all our programming and UI guidelines to the letter when developing your application. Oh, and we mean it!

- You must give us 30% of every sale of your application

- We can reject your application for any number of reasons you might not like

- Your application will only ever be able to run on one kind of phone. Adios portability!

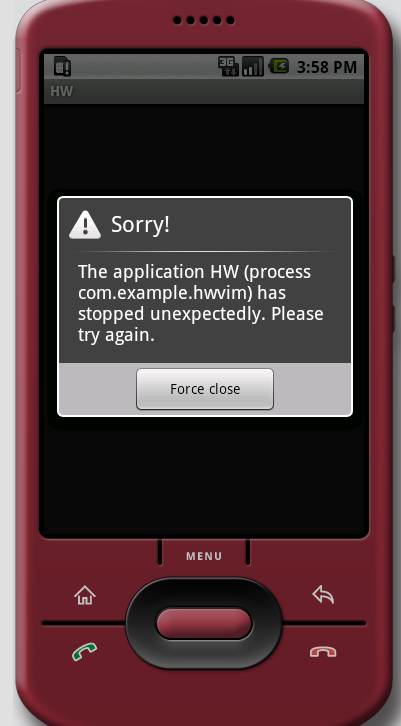

Where do I sign up? No, seriously, where?! (BTW, great talk, Rory Blyth.) Believe it or not, these rules are exactly why Apple's long-term iPhone vision is paying off and Google's never will. This lockdown policy is why the experience of using an iPhone is so consistent, why the battery life seems to remain steady despite my adding 10 new apps, and why gestures like sliding a panel, toggling a switch, and entering text are consistent in every application. Most importantly, it is why I have never seen a dialog box like this.

I don't want to see dialog boxes like that, especially when my only option is "Force close". As opposed to what? There is only one choice!!! Just make the choice for me, hide the whole ordeal, and let me move on.

During the Android talk, the representative from Google referred to this as "the error dialog which G1 owners should all be familiar with now". A huge, ugly dialog box. Shit happens, force close, but try again, mate! He explained to the room that this wasn't Android's fault; it was due to Android application programmers who didn't understand threading. Threading, of all things! He reminded us that the best thing about Android was that its marketplace didn't have draconian rules and guidelines like those meanies over at Apple. Anyone can make an application for Android, and we all know that when everyone gets to play, no one wins.

Which brings me back to my intro. You see, Apple understands the economics of the crazy way humans work. Programmers are going to write crappy code, and programmers don't like big corporations telling them what they can and can't do. We know best! We must have error dialogs! How dare you not let me use Java, Ruby, or the language of the month to write my iPhone app! We must have our application work on all 39 different models because that's more sales! This should all be obvious!!!

And yet it's all wrong. What we want is not always what is good for us.

In order to create a consistent phone experience, in order to have it work reliably (OK, forget AT&T for this argument; I'm talking about Apple's role), in order for the battery to have a decent lifespan, and in order to sell tons of applications in a unified, consistent manner, Apple had to do the very things that should have turned developers away. And it worked. Now people are lining up to give Apple 30% of their sales just to get in on the only mobile platform in town.